How Can We Hold Tech Platforms Accountable?

In Their Own Words: Profiles from 2024-2025 Bass Connections Teams

The “In Their Own Words” series features profiles written by members of our 2024-2025 Bass Connections teams that showcase the discoveries, challenges and impact of teams who spent the year tackling real-world problems.

Platform accountability, or the evaluation of how a technology platform (e.g., Etsy, Roblox, Spotify, YouTube) demonstrates secure data management processes, is important to how we assess the impact of a platform on security and society. Legislation and policy must be carefully constructed to monitor and regulate responsible industry behavior. However, experts are divided on the best approach.

The Platform Accountability in Technology Policy team developed a platform accountability framework that companies can use to demonstrate that they are behaving responsibly and that governments can use to measure company behavior more effectively. Team members examined the intersections between business, law and policy to understand platform accountability and the processes for evaluating companies’ compliance with standards.

This team was led by David Hoffman (Public Policy) and Kenneth Rogerson (Public Policy).

By: Members of the Platform Accountability in Technology Policy team

Our team examined the responsibilities of platforms like Etsy, Roblox and Spotify around key themes, such as transparency around compensation and pricing models; use of data profiling for targeted advertisements; disclosure of AI-generated content; flagging mechanisms for harmful or inappropriate content; and copyright.

In the fall, team members wrote in-depth analytical reports on Etsy, Roblox, Spotify and YouTube with explorations of their privacy policies, terms of use policies and applicable regulations.

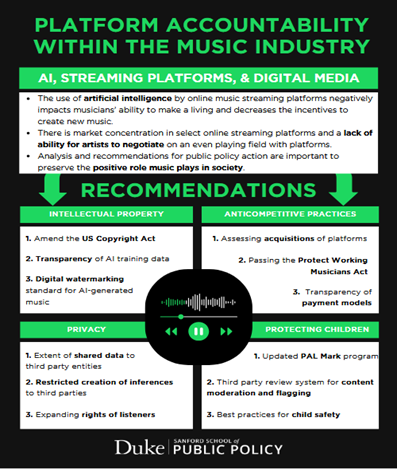

In the spring, the team wrote a white paper examining the impact of AI on the music industry, focusing on policy solutions around intellectual property, anti-competitive practices, privacy and personal data use and protection of children. The team presented research to music industry and advocacy stakeholders in Washington, D.C., in April 2025. Team members also used the content of the white paper to produce additional policy deliverables, including one-pagers, infographics and blog posts.

Our findings on AI in the music industry are summarized below. These findings will serve as a foundation for our 2025-2026 team.

AI in the Music Industry

Technology has brought new methods to create music and reach listeners. However, it has also decreased the ability of musicians to receive just compensation for their creative work.

AI use by online music streaming platforms has the potential to amplify this negative impact on human artists. In some cases, AI is replacing human musicians as algorithms create new music from databases of existing human work.

Music has played an important role in democratic participation and free expression. Analysis and recommendations for public policy action are important to preserve the positive role music plays in society.

Intellectual Property

- It is unclear whether current copyright laws provide intellectual property protection when copyrighted music is used to train AI models to create new music.

- The current system of notice and takedown places burdens on listeners and musicians when they are not in a good position to know whether a song is AI-generated.

Recommendations

- Amend copyright laws to protect against unauthorized use of training data for AI models.

- Promote transparency by establishing a digital watermarking standard for AI-generated music.

Anti-Competitive Practices

- Market concentration decreases options for musicians and listeners.

- The use of personal data increases market concentration.

- Artists’ inability to collectively bargain decreases their ability to see adequate compensation.

- There is a lack of transparency about how payments and commercial relationships influence the distribution of music via streaming platform algorithms.

Recommendation

- Pass the Protect Working Musicians Act with requirements for AI transparency.

Privacy

- Streaming platforms’ privacy policies reveal the need for transparency regarding how users’ data is collected, how it is shared with third parties, and how it influences monetization mechanisms.

Recommendation

- Develop industry best practices for data sharing and for giving individuals the right to access, correct, and delete their data.

Protection of Children

- There is a need to protect children’s data, eliminate exposure to age-inappropriate music, and increase parental controls.

Recommendations

- Update the PAL Mark program to address the use of AI and targeted profiling of children.

- Implement a third-party review system for content moderation and flagging.

- Create industry best practices around child safety features for parents.

Learn More

- Read another student- and faculty-authored team profile from the “In Their Own Words” series.

- Explore current and previous Bass Connections teams.

- Learn about the project team experience through stories from students.