Cultures of Collaboration: Managing the Moral AI Lab

April 11, 2018

A 2015 graduate of Grinnell College and a strong believer in the power of interdisciplinary scholarship, Kenzie Doyle is the manager of the Moral Artificial Intelligence (Moral AI) Lab, which is home to the Bass Connections team How to Build Ethics into Robust Artificial Intelligence.

Here, Doyle reflects on her academic and career path, her growing interest in artificial intelligence (AI) research and her plans for the future.

By Kenzie Doyle

I started looking for my first “real” job during the summer of 2016. I graduated from Grinnell College in 2015 with a major in Psychology and many Literature credits, and for a year I had been “thinking” – trying to figure out how I wanted to go about my life. I found a job listing for a lab manager for the Moral Attitudes and Decisions Lab (MADLAB) working for Walter Sinnott-Armstrong. I looked into the lab briefly, submitted an application and moved on with my search.

After a couple of months, I had not forgotten about MADLAB. My undergraduate liberal arts experience paired with my difficulty deciding what I wanted to do after graduating was growing into a dedication to finding work in a dynamic, interdisciplinary environment. Grinnell’s emphasis on social justice paired with the ethics-focused literature that I had encountered as an undergraduate sparked my academic interest in morality.

I wanted to know more about MADLAB, so I used the massively under-acknowledged skill of cold contacting, and emailed Dr. Sinnott-Armstrong for an informational interview. I gave him my resume and references, chatted about the projects in MADLAB and left feeling more informed but still jobless.

I was not unemployed for long. I received an email from Dr. Sinnott-Armstrong informing me that one of his collaborators, Jana Schaich Borg, was looking for a research assistant for a project in the Moral AI Lab. As AI makes increasingly important decisions in the world, researchers in the Moral AI Lab aim to integrate ethics with artificial intelligence. Though I did not have a background in AI, I eagerly agreed to be interviewed. Dr. Sinnott-Armstrong and Dr. Schaich Borg seemed to like me. I was hired.

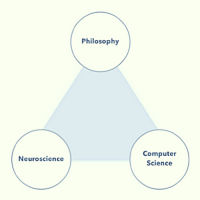

I soon learned that in the Moral AI Lab, I would be working on an experiment related to game theory. We would empirically investigate what influences human behavior in economic exchanges, with the hope of being able to code an AI to make morally charged decisions like a human. The group included impressive students from a range of fields, among them computer science, philosophy, neuroscience, economics and law, as well as Dr. Sinnott-Armstrong, Dr. Schaich Borg and Vincent Conitzer. Together we fine-tuned the experiment before deploying it.

My background in experimental design and psychometrics came in handy. I was able to point out ways in which what we were doing might influence participants’ thought processes, while others in the group identified the necessary information to build decision trees mapping out participants’ behavior. I was exhilarated to find my hopes for the lab fulfilled: the array of backgrounds and experiences people brought to the interdisciplinary lab setting exposed everyone to new ways of thinking.

As our experiment progressed, I led data collection as the project manager. Part of my job as a psychologist was to observe what the participants thought of the experiment. So far, I have found that, among other things, participants want to get as much money out of us as possible, they can be surprisingly generous with each other and they feel that being forced to parrot another person is a good ice breaker. I brought these observations back to my teammates, which led to some changes to the experiment’s protocol and some hypotheses to test once we had enough data to run statistical and facial recognition analyses.

Last spring, all of the undergraduates working in the group graduated. Because a new group of undergraduate and graduate students were set to join us in the fall through the Bass Connections project How to Build Ethics into Robust Artificial Intelligence, we knew the lab would be bigger, and it was increasingly evident to the project leaders that our group would need a dedicated manager. I appreciated learning from others’ skillsets and I liked managing projects, so I was excited to accept the offer to be the manager of the Moral AI Lab.

Managing the team has allowed me to learn an incredible amount about different approaches to Moral AI. For example, one of the lab’s projects includes developing an online activity in which people review two theoretical patients and decide which of them should receive a needed kidney transplant. I get to observe the development of this project not only from my own perspective but also from the students currently working on its creation. In our group, the philosopher’s insights tend toward engagement, while the computer science-turned-law-student focuses more on policy creation. They present their progress at lab meetings, where the undergraduate student interested in AI’s relationship to medicine can offer her own insights and the statisticians can explain the best format to interpret the results. The lab needs and values all of these viewpoints. They allow every individual in the group to learn alternative perspectives that would not otherwise be acknowledged or understood.

I have increasingly come to see the importance of the Moral AI Lab’s work. It is clear to me that AI is likely to take on a much larger role in our lives in the near future, and AI programmers should think about the ethical implications of their decisions. If programmers only talk to other programmers, these conversations are unlikely to happen. However, if developers can collaborate with teams including policy makers, neuroscientists, philosophers and economists, they are much more likely to create better products. Collaboration across disciplines requires more communication than work within a single discipline, but it is worth the effort.

After I leave the Moral AI Lab, I intend to pursue a Ph.D. in Psychology. Based on my work in the lab, I now cannot imagine getting a graduate degree without collaborating with people from a wide variety of fields. My involvement in the group has allowed me not only to develop my professional skillset, but also to understand my values as a researcher and as a person.

Learn More

- Join us at the Bass Connections Showcase on April 18.

- Check out additional Bass Connections in Brain & Society teams.

- Explore the Team Resource Center, which includes Guidelines for Teamwork, a team values document co-authored by Kenzie Doyle.